Last updated on 28/06/2025

If you’ve been alive in tech anytime in the past two years, you’ve been told, repeatedly, loudly, often by people who discovered prompt engineering five minutes ago, that large language models are here to revolutionize everything. Your job. Your team. Possibly civilization itself.

This is not that article.

Instead, I want to talk about something much less glamorous and much more useful: using generative AI to get better at the unsexy parts of knowledge work. Not writing code. Not launching autonomous agents that replace deliberate workflows in our products and ourselves. Just the normal stuff: understanding a messy situation, figuring out what to do, and communicating clearly, you know, the bulk of all of our jobs.

I’ve spent the past few months running my own experiments, sometimes useful, often frustrating, on how LLMs can help me research, plan, and write more effectively. I’m not here to sell you a universal playbook. I honestly don’t think one exists. What I can offer are practices that have worked for me, and a nudge to go try them yourself.

Because here’s the first big secret:

This stuff isn’t magic.

It’s a skill. It’s learnable. And yes, sometimes you’ll get back a response so spectacularly off-base that you question your own life choices. That’s not failure, that’s feedback.

Generative AI Doesn’t Care About You (Or Your Outcomes)

Let’s get this out of the way early. If you remember nothing else from this post, remember this:

The tool doesn’t care about your outcomes. It only cares that you keep using it.

That’s it. That’s my one law of generative AI.

The slick onboarding flows and case studies all suggest LLMs are invested in producing excellent thought work. Yes, but also no. They’re only invested in your engagement: in producing output that you’ll accept, and that will get you to come back later. That’s not exactly the same thing. The burden is on you to do the thinking, to frame your questions well, to close the loop when things go sideways, and most importantly to examine the output with a critical eye.

If you can accept that upfront, you’ll save yourself a lot of frustration, and you’ll be miles ahead of the folks who keep expecting ChatGPT to channel the ghost of Peter Drucker on the first try.

Why Bother? The Value in Non-Coding Use Cases

Most AI stories in our space fixate on code generation or on replacing bespoke, artisanally hand-crafted workflows with agentic planning that might work most of the time. There’s value there, but it’s user-facing. Getting the full benefit out of those approaches demands intuition about how LLMs work, what goes into a prompt, what information helps, and what distracts.

Sure, you can learn that intuition in your code bases, where it will eventually make it to production, but that’s a live-fire exercise with real consequences for your products and customers.

Instead, let me suggest that you build those instincts in the thought and communication work that scaffolds your technical jobs. Here, the stakes are lower in part because the audience is friendlier (you and your colleagues). In these contexts, a rough first draft doesn’t cause churn or degrade trust. It’s just a draft, one that you’re going to edit.

Think about all the times you need to:

- Summarize research into something legible

- Draft a proposal or executive email

- Reframe a messy Slack discussion into a decision record

- Brainstorm names, outlines, or talking points

- Plan who to meet at a conference and why

These are the kinds of tasks where LLMs shine. Not because they’re replacing your judgment, but because they lighten the cognitive load and surface starting points faster.

Let me give you an example from my own work.

A Tiny Case Study: Planning My Conference Networking

Later this year, I’m heading to KCDC (Kansas City Developer Conference, come see me!). As usual, I have a vague ambition to meet interesting people and maybe stir up business. The thing is, “interesting people” is a pretty broad category, and my calendar is always pretty full to start with.

So I did what any reasonable person experimenting with LLMs would do: I asked ChatGPT, “Who should I meet at KCDC this year?” You can probably guess what happened. It gave me a list that was about as useful as a tarot reading. Amusing, but not accurate or informative.

That’s when I remembered: vague inputs yield arbitrary outputs.

So I rephrased:

- I’m an AI strategist interested in how people are talking about AI.

- I want to grow my professional network and drum up business.

- Here are some examples of speakers and topics I find relevant.

The model’s next pass? Still imperfect, but directionally better. I got ideas for which sessions to visit, who to talk to and why, how to approach those conversations, and a rough plan for follow-ups.

It wasn’t magic. But it was a head start, one I could refine with my own judgment. Please, please, please never pass the output of these things on without validating it.

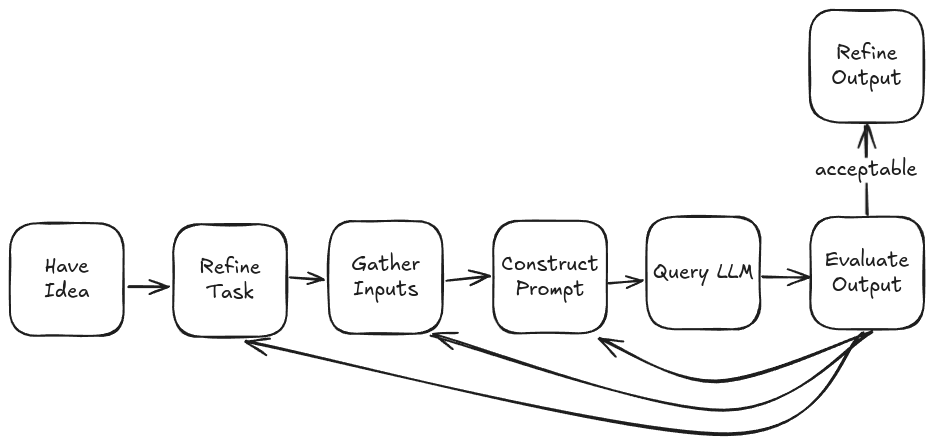

The Workflow: From Barest Idea to Something Useful

If you’re thinking about trying this yourself, here’s a basic pattern I keep coming back to. Feel free to adapt it:

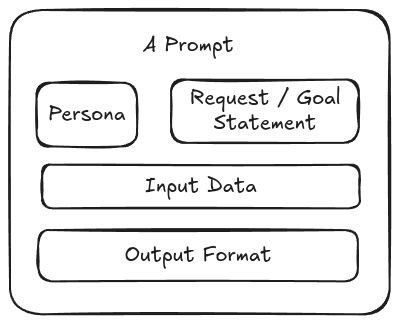

- Start with a persona. Tell the model who it is pretending to be: an expert, a helpful colleague, a conference organizer. This sets tone and context.

- Be explicit about your goals. What do you actually want to accomplish? “Help me look smart” is fine if that’s the honest answer.

- Provide context. Share any known constraints, examples, or reference materials. The model can’t guess what you already know.

- State what information you expect back. A list? A summary? A table comparing options?

- Iterate. The first output is rarely final. Think of it like a rough draft you’d get from an intern: directionally correct, but in need of polish.
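If it helps to see that pattern as something concrete, here is a minimal Python sketch of it. To be clear, this is not a tool I use or a library that exists anywhere: `PromptSpec`, `build_prompt`, and `add_context` are names I made up for illustration, and the wording of the assembled prompt is just one plausible phrasing. The only point is that each step in the list becomes an explicit field you have to fill in.

```python
from dataclasses import dataclass, field, replace


@dataclass(frozen=True)
class PromptSpec:
    """Hypothetical container for the parts of a prompt listed above."""
    persona: str                                       # who the model should pretend to be
    goal: str                                          # what you actually want to accomplish
    context: list[str] = field(default_factory=list)   # constraints, examples, references
    expected_output: str = ""                          # a list? a summary? a table?


def build_prompt(spec: PromptSpec) -> str:
    """Assemble the parts into one plain-text prompt, skipping empty parts."""
    sections = [f"You are {spec.persona}.", f"My goal: {spec.goal}"]
    if spec.context:
        bullets = "\n".join(f"- {item}" for item in spec.context)
        sections.append(f"Context:\n{bullets}")
    if spec.expected_output:
        sections.append(f"Please respond with: {spec.expected_output}")
    return "\n\n".join(sections)


def add_context(spec: PromptSpec, note: str) -> PromptSpec:
    """The 'iterate' step: return a new spec with one more piece of context."""
    return replace(spec, context=[*spec.context, note])


# Example values loosely based on the conference case study above.
first_try = PromptSpec(
    persona="an experienced conference networking coach",
    goal="grow my professional network and drum up business at KCDC",
    expected_output="a short list of sessions to attend, with one reason each",
)
second_try = add_context(
    first_try,
    "I'm an AI strategist interested in how people are talking about AI.",
)
print(build_prompt(second_try))
```

You would paste the printed text into whatever chat tool you use. The interesting part is the second try: when the output disappoints, you add or change one field and run it again, rather than starting from a blank box.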

Prompt engineering, regrettably, matters. You don’t need to learn arcane incantations, but you do need to be deliberate. The good news is it’s learnable. You get better with practice.

Bad Output Isn’t a Crisis

This is the other place where people get stuck. They try a prompt once, get something silly (“Here are seven reasons why bananas are relevant to your AI strategy”), and conclude the tool is worthless.

Here’s the reality:

Bad output is an opportunity to improve your process.

Ask yourself:

- Was my question too broad or too vague?

- Did I skip providing examples?

- Did I forget to define the audience or tone?

- Is the model missing relevant background information?

Each failed attempt is a data point. Close the loop, tweak the inputs, and try again.

Where Else Does This Apply?

Once you get comfortable, you’ll find this approach works for more than just conference planning. A few other examples I’ve used personally:

- Turning internal retros into case studies. Summarize notes, extract lessons, and reframe them for external sharing.

- Drafting onboarding materials. Compile scattered docs into something coherent.

- Brainstorming product names, with style and tone constraints baked into the prompt.

- Reshaping research notes into a conference talk proposal.

- Transforming Slack threads into a knowledge base article.

Each of these starts with the same premise: you do the thinking, the model does the heavy lifting of generating drafts and variations.

The Tools I Actually Use

Quick sidebar: the tools themselves don’t really matter. But in case you’re curious, here’s what I keep handy:

- OpenAI (GPT-4). Cheap paid tier. Surprisingly capable for general text tasks.

- Ollama. Local model hosting. Good for privacy and speed when you don’t want to hit the cloud.

- Whisper. Local transcription to turn my audio rambles into text I can clean up.

- Obsidian. Just a note-taking system, but convenient for process logging.

You don’t need this exact stack. The important part is to build a workflow you’ll actually use.

One More Thing: It’s Still Not Magic

If I sound like a broken record, that’s intentional. Because every time I think I’m past the phase of expecting a miracle, I find myself wishing for one.

The truth is simpler: LLMs are tools. Weird, sometimes brilliant, often frustrating tools. They won’t do your work for you. But they can help you work faster and think more clearly—if you’re willing to iterate.

And maybe, just maybe, Shia LaBeouf was onto something when he screamed “JUST DO IT!” into that green screen. You’re not going to learn this by reading about it. You have to try it.

Want Help Getting Started?

If you’re curious about applying LLMs to your own workflows, or your products, I’m here. Get in touch.